The previous posts covered installing HBase and using the HBase shell commands.

0. Connection problem

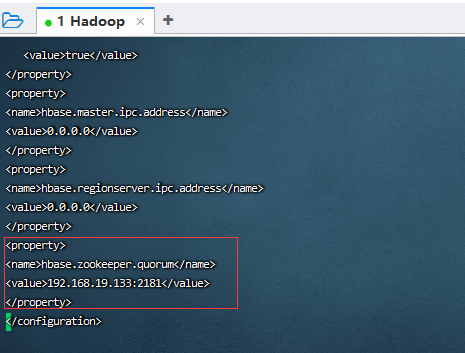

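Connecting to HBase from Java can fail with an error. The cause is that HBase runs as a cluster that is registered with and managed by ZooKeeper: to connect, the client only needs the ZooKeeper address, and it then obtains the information about the other HBase nodes from ZooKeeper. The fix is as follows: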

Edit hbase-site.xml in the HBase configuration directory and add the following property:

<property>

<name>hbase.zookeeper.quorum</name>

<value>192.168.19.133:2181</value>

</property>

As shown below, where 192.168.19.133 is the address of the virtual machine:

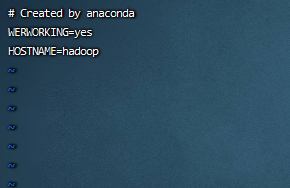
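The value of `hbase.zookeeper.quorum` is a comma-separated list of ZooKeeper servers, and ZooKeeper's default client port is 2181. The sketch below only illustrates that format using the JDK; it is not how HBase itself parses the setting. The class name `QuorumFormat` and the host names `zk1` and `zk2` are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;

public class QuorumFormat {

    // ZooKeeper's default client port.
    static final int DEFAULT_CLIENT_PORT = 2181;

    /** Splits a quorum string into "host:port" entries, adding the default port when missing. */
    static List<String> normalize(String quorum) {
        List<String> servers = new ArrayList<>();
        for (String entry : quorum.split(",")) {
            String server = entry.trim();
            if (server.isEmpty()) {
                continue; // ignore empty entries such as a trailing comma
            }
            servers.add(server.contains(":") ? server : server + ":" + DEFAULT_CLIENT_PORT);
        }
        return servers;
    }

    public static void main(String[] args) {
        // The value used in this post.
        System.out.println(normalize("192.168.19.133:2181"));
        // Hypothetical multi-node quorum with one explicit port.
        System.out.println(normalize("zk1, zk2:2182"));
    }
}
```

For a single-node setup like the one in this post, the list has exactly one entry.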

Run vi /etc/sysconfig/network to edit the network configuration.

Enter the following settings:

NETWORKING=yes

HOSTNAME=hadoop

As shown:

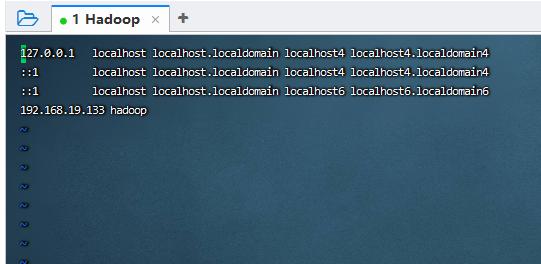

Run vi /etc/hosts to edit the hosts file.

Enter the following entries:

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.19.133 hadoop

As shown:

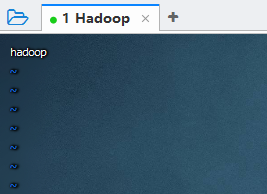

Run vi /etc/hostname to edit the hostname.

Enter:

hadoop

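As shown:

(This change takes effect only after the system is restarted; run reboot.)

Alternatively, set the hostname with hostnamectl set-hostname hadoop. This setting does not require a restart; it takes effect as soon as you open a new terminal session.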

Edit the hosts configuration on the Windows side

Edit the hosts file in the C:\Windows\System32\drivers\etc directory

and add: 192.168.19.133 hadoop

Alternatively, use SwitchHosts to make the change.
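The hosts entries matter because the client has to resolve the host name that the HBase node registered under (here `hadoop`). A minimal sketch for checking name resolution from the client machine, using only the JDK; the class name `HostCheck` is hypothetical, and `hadoop` is the host name configured above.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

public class HostCheck {

    /** Returns the IP address that host resolves to, or null if it cannot be resolved. */
    static String resolve(String host) {
        try {
            return InetAddress.getByName(host).getHostAddress();
        } catch (UnknownHostException e) {
            return null;
        }
    }

    public static void main(String[] args) {
        // Defaults to the host name used in this post; pass another name as the first argument.
        String host = args.length > 0 ? args[0] : "hadoop";
        String ip = resolve(host);
        if (ip == null) {
            System.out.println(host + " does not resolve; check the hosts file");
        } else {
            System.out.println(host + " -> " + ip);
        }
    }
}
```

If the hosts entry is in place, running it on the Windows machine should print the virtual machine's address for `hadoop`.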

Next, we use the Java API to operate on HBase.

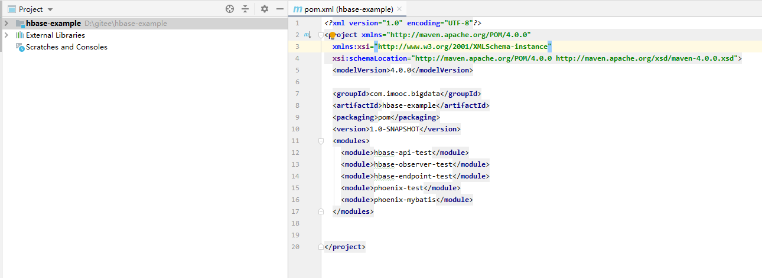

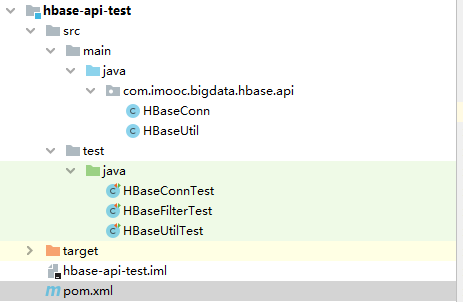

Screenshot of the parent project:

pom file of the parent project:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.imooc.bigdata</groupId>

<artifactId>hbase-example</artifactId>

<packaging>pom</packaging>

<version>1.0-SNAPSHOT</version>

<modules>

<module>hbase-api-test</module>

<module>hbase-observer-test</module>

<module>hbase-endpoint-test</module>

<module>phoenix-test</module>

<module>phoenix-mybatis</module>

</modules>

</project>

1. Set up the Maven project (IntelliJ IDEA is recommended)

The module's pom.xml file:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<parent>

<artifactId>hbase-example</artifactId>

<groupId>com.imooc.bigdata</groupId>

<version>1.0-SNAPSHOT</version>

</parent>

<modelVersion>4.0.0</modelVersion>

<artifactId>hbase-api-test</artifactId>

<dependencies>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<version>1.2.4</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.12</version>

<scope>test</scope>

</dependency>

</dependencies>

</project>

2. Screenshot of the complete project:

3. HBaseConn:

package com.imooc.bigdata.hbase.api;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.Connection;

import org.apache.hadoop.hbase.client.ConnectionFactory;

import org.apache.hadoop.hbase.client.Table;

public class HBaseConn {

private static final HBaseConn INSTANCE = new HBaseConn();

private static Configuration configuration;

private static Connection connection;

private HBaseConn() {

try {

if (configuration == null) {

configuration = HBaseConfiguration.create();

configuration.set("hbase.zookeeper.quorum", "192.168.19.133:2181");

}

} catch (Exception e) {

e.printStackTrace();

}

}

private Connection getConnection() {

if (connection == null || connection.isClosed()) {

try {

connection = ConnectionFactory.createConnection(configuration);

} catch (Exception e) {

e.printStackTrace();

}

}

return connection;

}

public static Connection getHBaseConn() {

return INSTANCE.getConnection();

}

public static Table getTable(String tableName) throws IOException {

return INSTANCE.getConnection().getTable(TableName.valueOf(tableName));

}

public static void closeConn() {

if (connection != null) {

try {

connection.close();

} catch (IOException ioe) {

ioe.printStackTrace();

}

}

}

}
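`getConnection()` in HBaseConn is not synchronized, so two threads calling it at the same moment could each create a connection. The sketch below shows the same lazy-creation pattern with `synchronized` added. It uses a hypothetical `FakeConnection` stand-in so it runs without a cluster; the class names are assumptions for illustration, not part of the HBase API.

```java
import java.io.Closeable;
import java.util.concurrent.atomic.AtomicInteger;

public class LazyHolderDemo {

    /** Hypothetical stand-in for an expensive resource such as an HBase Connection. */
    static final class FakeConnection implements Closeable {
        static final AtomicInteger CREATED = new AtomicInteger();
        private volatile boolean closed;

        FakeConnection() {
            CREATED.incrementAndGet();
        }

        boolean isClosed() {
            return closed;
        }

        @Override
        public void close() {
            closed = true;
        }
    }

    private static FakeConnection connection;

    /** Synchronized so that concurrent callers never create two connections. */
    static synchronized FakeConnection get() {
        if (connection == null || connection.isClosed()) {
            connection = new FakeConnection();
        }
        return connection;
    }

    static synchronized void close() {
        if (connection != null) {
            connection.close();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        // Several threads ask for the connection at once.
        Thread[] threads = new Thread[8];
        for (int i = 0; i < threads.length; i++) {
            threads[i] = new Thread(LazyHolderDemo::get);
            threads[i].start();
        }
        for (Thread t : threads) {
            t.join();
        }
        System.out.println("connections created: " + FakeConnection.CREATED.get());
    }
}
```

For the single-threaded JUnit tests in this post the unsynchronized version is enough; the difference only matters once several threads share the helper.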

4. HBaseUtil:

package com.imooc.bigdata.hbase.api;

import java.io.IOException;

import java.util.Arrays;

import java.util.List;

import org.apache.hadoop.hbase.HColumnDescriptor;

import org.apache.hadoop.hbase.HTableDescriptor;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.Delete;

import org.apache.hadoop.hbase.client.Get;

import org.apache.hadoop.hbase.client.HBaseAdmin;

import org.apache.hadoop.hbase.client.Put;

import org.apache.hadoop.hbase.client.Result;

import org.apache.hadoop.hbase.client.ResultScanner;

import org.apache.hadoop.hbase.client.Scan;

import org.apache.hadoop.hbase.client.Table;

import org.apache.hadoop.hbase.filter.FilterList;

import org.apache.hadoop.hbase.util.Bytes;

public class HBaseUtil {

/**

* Creates an HBase table.

*

* @param tableName table name

* @param cfs array of column family names

* @return whether the table was created

*/

public static boolean createTable(String tableName, String[] cfs) {

try (HBaseAdmin admin = (HBaseAdmin) HBaseConn.getHBaseConn().getAdmin()) {

if (admin.tableExists(tableName)) {

return false;

}

HTableDescriptor tableDescriptor = new HTableDescriptor(TableName.valueOf(tableName));

Arrays.stream(cfs).forEach(cf -> {

HColumnDescriptor columnDescriptor = new HColumnDescriptor(cf);

columnDescriptor.setMaxVersions(1);

tableDescriptor.addFamily(columnDescriptor);

});

admin.createTable(tableDescriptor);

} catch (Exception e) {

e.printStackTrace();

// Report the failure instead of falling through to "return true".

return false;

}

return true;

}

/**

* Deletes an HBase table.

*

* @param tableName table name

* @return whether the table was deleted

*/

public static boolean deleteTable(String tableName) {

try (HBaseAdmin admin = (HBaseAdmin) HBaseConn.getHBaseConn().getAdmin()) {

admin.disableTable(tableName);

admin.deleteTable(tableName);

} catch (Exception e) {

e.printStackTrace();

// Report the failure instead of falling through to "return true".

return false;

}

return true;

}

/**

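* Inserts a single value into HBase.

*

* @param tableName table name

* @param rowKey unique row identifier

* @param cfName column family name

* @param qualifier column qualifier

* @param data value to store

* @return whether the insert succeeded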

*/

public static boolean putRow(String tableName, String rowKey, String cfName, String qualifier,

String data) {

try (Table table = HBaseConn.getTable(tableName)) {

Put put = new Put(Bytes.toBytes(rowKey));

put.addColumn(Bytes.toBytes(cfName), Bytes.toBytes(qualifier), Bytes.toBytes(data));

table.put(put);

} catch (IOException ioe) {

ioe.printStackTrace();

// Report the failure instead of falling through to "return true".

return false;

}

return true;

}

public static boolean putRows(String tableName, List<Put> puts) {

try (Table table = HBaseConn.getTable(tableName)) {

table.put(puts);

} catch (IOException ioe) {

ioe.printStackTrace();

// Report the failure instead of falling through to "return true".

return false;

}

return true;

}

/**

* Gets a single row.

*

* @param tableName table name

* @param rowKey unique row identifier

* @return the query result

*/

public static Result getRow(String tableName, String rowKey) {

try (Table table = HBaseConn.getTable(tableName)) {

Get get = new Get(Bytes.toBytes(rowKey));

return table.get(get);

} catch (IOException ioe) {

ioe.printStackTrace();

}

return null;

}

public static Result getRow(String tableName, String rowKey, FilterList filterList) {

try (Table table = HBaseConn.getTable(tableName)) {

Get get = new Get(Bytes.toBytes(rowKey));

get.setFilter(filterList);

return table.get(get);

} catch (IOException ioe) {

ioe.printStackTrace();

}

return null;

}

public static ResultScanner getScanner(String tableName) {

try (Table table = HBaseConn.getTable(tableName)) {

Scan scan = new Scan();

scan.setCaching(1000);

return table.getScanner(scan);

} catch (IOException ioe) {

ioe.printStackTrace();

}

return null;

}

/**

* Scans a range of rows.

*

* @param tableName table name

* @param startRowKey start row key

* @param endRowKey stop row key

* @return a ResultScanner instance

*/

public static ResultScanner getScanner(String tableName, String startRowKey, String endRowKey) {

try (Table table = HBaseConn.getTable(tableName)) {

Scan scan = new Scan();

scan.setStartRow(Bytes.toBytes(startRowKey));

scan.setStopRow(Bytes.toBytes(endRowKey));

scan.setCaching(1000);

return table.getScanner(scan);

} catch (IOException ioe) {

ioe.printStackTrace();

}

return null;

}

public static ResultScanner getScanner(String tableName, String startRowKey, String endRowKey,

FilterList filterList) {

try (Table table = HBaseConn.getTable(tableName)) {

Scan scan = new Scan();

scan.setStartRow(Bytes.toBytes(startRowKey));

scan.setStopRow(Bytes.toBytes(endRowKey));

scan.setFilter(filterList);

scan.setCaching(1000);

return table.getScanner(scan);

} catch (IOException ioe) {

ioe.printStackTrace();

}

return null;

}

/**

* Deletes one row from HBase.

*

* @param tableName table name

* @param rowKey unique row identifier

* @return whether the row was deleted

*/

public static boolean deleteRow(String tableName, String rowKey) {

try (Table table = HBaseConn.getTable(tableName)) {

Delete delete = new Delete(Bytes.toBytes(rowKey));

table.delete(delete);

} catch (IOException ioe) {

ioe.printStackTrace();

// Report the failure instead of falling through to "return true".

return false;

}

return true;

}

public static boolean deleteColumnFamily(String tableName, String cfName) {

try (HBaseAdmin admin = (HBaseAdmin) HBaseConn.getHBaseConn().getAdmin()) {

admin.deleteColumn(tableName, cfName);

} catch (Exception e) {

e.printStackTrace();

// Report the failure instead of falling through to "return true".

return false;

}

return true;

}

public static boolean deleteQualifier(String tableName, String rowKey, String cfName,

String qualifier) {

try (Table table = HBaseConn.getTable(tableName)) {

Delete delete = new Delete(Bytes.toBytes(rowKey));

delete.addColumn(Bytes.toBytes(cfName), Bytes.toBytes(qualifier));

table.delete(delete);

} catch (IOException ioe) {

ioe.printStackTrace();

// Report the failure instead of falling through to "return true".

return false;

}

return true;

}

}
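HBaseUtil converts every row key with `Bytes.toBytes`, and HBase keeps rows sorted by comparing those bytes lexicographically. This matters when choosing row keys, because numeric suffixes do not sort numerically. The sketch below reproduces that ordering with the JDK only; the class `RowKeyOrder` and its methods are hypothetical helpers, not HBase API.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

public class RowKeyOrder {

    /** Compares two byte arrays lexicographically, treating each byte as unsigned. */
    static int compareBytes(byte[] a, byte[] b) {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int x = a[i] & 0xff;
            int y = b[i] & 0xff;
            if (x != y) {
                return x - y;
            }
        }
        // All shared bytes are equal: the shorter key sorts first.
        return a.length - b.length;
    }

    /** Compares two row keys by their UTF-8 bytes. */
    static int compareKeys(String a, String b) {
        return compareBytes(a.getBytes(StandardCharsets.UTF_8), b.getBytes(StandardCharsets.UTF_8));
    }

    public static void main(String[] args) {
        String[] keys = {"rowkey2", "rowkey10", "rowkey1"};
        Arrays.sort(keys, RowKeyOrder::compareKeys);
        // prints [rowkey1, rowkey10, rowkey2]
        System.out.println(Arrays.toString(keys));
    }
}
```

Note that `rowkey10` sorts before `rowkey2`; zero-padding the numeric part (for example `rowkey02`, `rowkey10`) is one common way to keep byte order and numeric order aligned.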

5. HBaseConnTest:

import java.io.IOException;

import org.apache.hadoop.hbase.client.Connection;

import org.apache.hadoop.hbase.client.Table;

import org.junit.Test;

import com.imooc.bigdata.hbase.api.HBaseConn;

public class HBaseConnTest {

@Test

public void getConnTest() {

Connection conn = HBaseConn.getHBaseConn();

System.out.println(conn.isClosed());

HBaseConn.closeConn();

System.out.println(conn.isClosed());

}

@Test

public void getTableTest() {

try {

Table table = HBaseConn.getTable("US_POPULATION");

System.out.println(table.getName().getNameAsString());

table.close();

} catch (IOException ioe) {

ioe.printStackTrace();

}

}

}

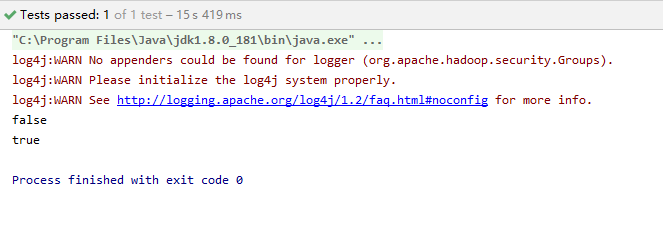

Run the getConnTest method:

Run the getTableTest method:

6. HBaseUtilTest:

import org.apache.hadoop.hbase.client.Result;

import org.apache.hadoop.hbase.client.ResultScanner;

import org.apache.hadoop.hbase.util.Bytes;

import org.junit.Test;

import com.imooc.bigdata.hbase.api.HBaseUtil;

public class HBaseUtilTest {

@Test

public void createTable() {

HBaseUtil.createTable("FileTable", new String[]{"fileInfo", "saveInfo"});

}

@Test

public void addFileDetails() {

HBaseUtil.putRow("FileTable", "rowkey1", "fileInfo", "name", "file1.txt");

HBaseUtil.putRow("FileTable", "rowkey1", "fileInfo", "type", "txt");

HBaseUtil.putRow("FileTable", "rowkey1", "fileInfo", "size", "1024");

HBaseUtil.putRow("FileTable", "rowkey1", "saveInfo", "creator", "jixin");

HBaseUtil.putRow("FileTable", "rowkey2", "fileInfo", "name", "file2.jpg");

HBaseUtil.putRow("FileTable", "rowkey2", "fileInfo", "type", "jpg");

HBaseUtil.putRow("FileTable", "rowkey2", "fileInfo", "size", "1024");

HBaseUtil.putRow("FileTable", "rowkey2", "saveInfo", "creator", "jixin");

}

@Test

public void getFileDetails() {

Result result = HBaseUtil.getRow("FileTable", "rowkey1");

if (result != null) {

System.out.println("rowkey=" + Bytes.toString(result.getRow()));

System.out.println("fileName=" + Bytes

.toString(result.getValue(Bytes.toBytes("fileInfo"), Bytes.toBytes("name"))));

}

}

@Test

public void scanFileDetails() {

ResultScanner scanner = HBaseUtil.getScanner("FileTable", "rowkey2", "rowkey2");

if (scanner != null) {

scanner.forEach(result -> {

System.out.println("rowkey=" + Bytes.toString(result.getRow()));

System.out.println("fileName=" + Bytes

.toString(result.getValue(Bytes.toBytes("fileInfo"), Bytes.toBytes("name"))));

});

scanner.close();

}

}

@Test

public void deleteRow() {

HBaseUtil.deleteRow("FileTable", "rowkey1");

}

@Test

public void deleteTable() {

HBaseUtil.deleteTable("FileTable");

}

}

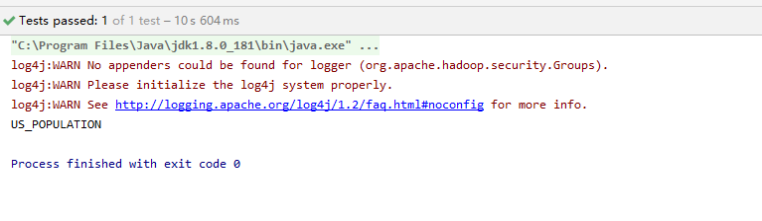

Run getFileDetails(), as shown:

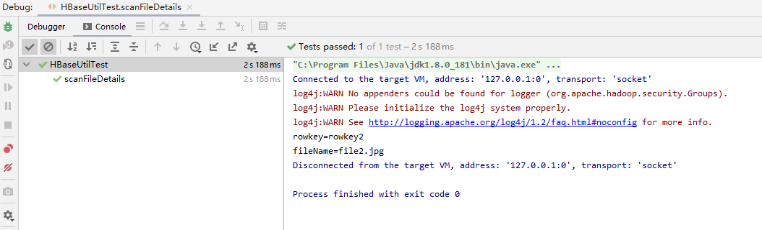

Run scanFileDetails(), as shown:

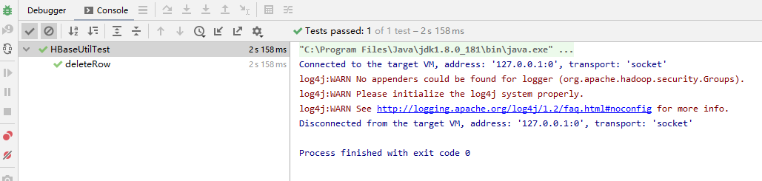

Run deleteRow(), as shown:

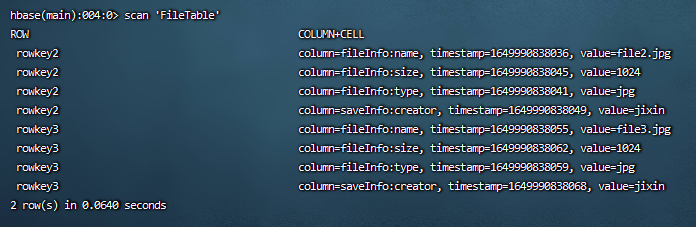

Check with scan 'FileTable', as shown:

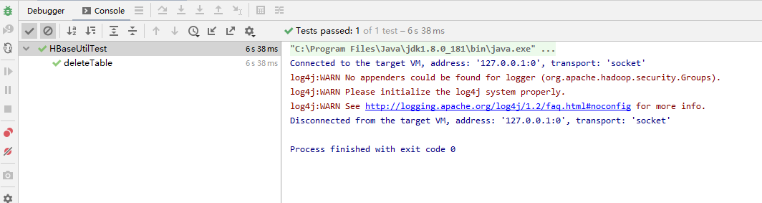

Run deleteTable(), as shown:

Check with list, as shown:

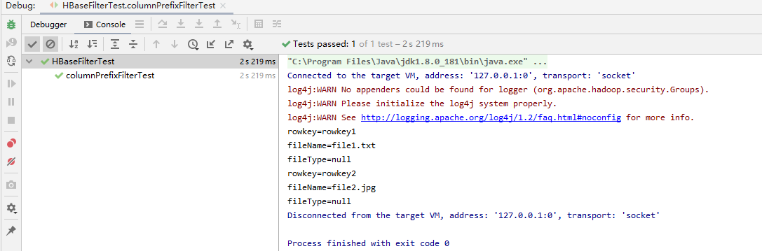

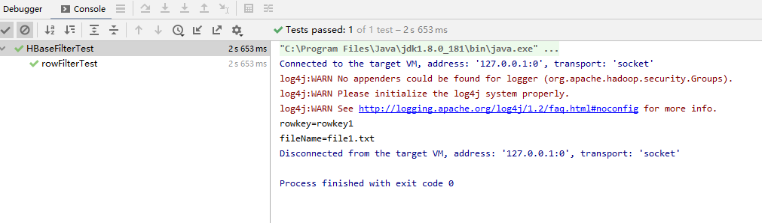

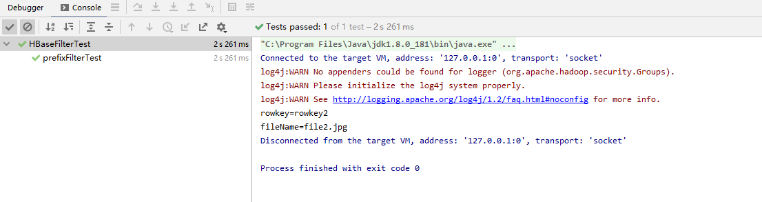

7. HBaseFilterTest:

import java.util.Arrays;

import org.apache.hadoop.hbase.client.ResultScanner;

import org.apache.hadoop.hbase.filter.BinaryComparator;

import org.apache.hadoop.hbase.filter.ColumnPrefixFilter;

import org.apache.hadoop.hbase.filter.CompareFilter.CompareOp;

import org.apache.hadoop.hbase.filter.Filter;

import org.apache.hadoop.hbase.filter.FilterList;

import org.apache.hadoop.hbase.filter.FilterList.Operator;

import org.apache.hadoop.hbase.filter.KeyOnlyFilter;

import org.apache.hadoop.hbase.filter.PageFilter;

import org.apache.hadoop.hbase.filter.PrefixFilter;

import org.apache.hadoop.hbase.filter.RowFilter;

import org.apache.hadoop.hbase.util.Bytes;

import org.junit.Test;

import com.imooc.bigdata.hbase.api.HBaseUtil;

public class HBaseFilterTest {

@Test

public void createTable() {

HBaseUtil.createTable("FileTable", new String[]{"fileInfo", "saveInfo"});

}

@Test

public void addFileDetails() {

HBaseUtil.putRow("FileTable", "rowkey1", "fileInfo", "name", "file1.txt");

HBaseUtil.putRow("FileTable", "rowkey1", "fileInfo", "type", "txt");

HBaseUtil.putRow("FileTable", "rowkey1", "fileInfo", "size", "1024");

HBaseUtil.putRow("FileTable", "rowkey1", "saveInfo", "creator", "jixin");

HBaseUtil.putRow("FileTable", "rowkey2", "fileInfo", "name", "file2.jpg");

HBaseUtil.putRow("FileTable", "rowkey2", "fileInfo", "type", "jpg");

HBaseUtil.putRow("FileTable", "rowkey2", "fileInfo", "size", "1024");

HBaseUtil.putRow("FileTable", "rowkey2", "saveInfo", "creator", "jixin");

HBaseUtil.putRow("FileTable", "rowkey3", "fileInfo", "name", "file3.jpg");

HBaseUtil.putRow("FileTable", "rowkey3", "fileInfo", "type", "jpg");

HBaseUtil.putRow("FileTable", "rowkey3", "fileInfo", "size", "1024");

HBaseUtil.putRow("FileTable", "rowkey3", "saveInfo", "creator", "jixin");

}

@Test

public void rowFilterTest() {

Filter filter = new RowFilter(CompareOp.EQUAL, new BinaryComparator(Bytes.toBytes("rowkey1")));

FilterList filterList = new FilterList(Operator.MUST_PASS_ONE, Arrays.asList(filter));

ResultScanner scanner = HBaseUtil

.getScanner("FileTable", "rowkey1", "rowkey3", filterList);

if (scanner != null) {

scanner.forEach(result -> {

System.out.println("rowkey=" + Bytes.toString(result.getRow()));

System.out.println("fileName=" + Bytes

.toString(result.getValue(Bytes.toBytes("fileInfo"), Bytes.toBytes("name"))));

});

scanner.close();

}

}

@Test

public void prefixFilterTest() {

Filter filter = new PrefixFilter(Bytes.toBytes("rowkey2"));

FilterList filterList = new FilterList(Operator.MUST_PASS_ALL, Arrays.asList(filter));

ResultScanner scanner = HBaseUtil

.getScanner("FileTable", "rowkey1", "rowkey3", filterList);

if (scanner != null) {

scanner.forEach(result -> {

System.out.println("rowkey=" + Bytes.toString(result.getRow()));

System.out.println("fileName=" + Bytes

.toString(result.getValue(Bytes.toBytes("fileInfo"), Bytes.toBytes("name"))));

});

scanner.close();

}

}

@Test

public void keyOnlyFilterTest() {

Filter filter = new KeyOnlyFilter(true);

FilterList filterList = new FilterList(Operator.MUST_PASS_ALL, Arrays.asList(filter));

ResultScanner scanner = HBaseUtil

.getScanner("FileTable", "rowkey1", "rowkey3", filterList);

if (scanner != null) {

scanner.forEach(result -> {

System.out.println("rowkey=" + Bytes.toString(result.getRow()));

System.out.println("fileName=" + Bytes

.toString(result.getValue(Bytes.toBytes("fileInfo"), Bytes.toBytes("name"))));

});

scanner.close();

}

}

@Test

public void columnPrefixFilterTest() {

Filter filter = new ColumnPrefixFilter(Bytes.toBytes("nam"));

FilterList filterList = new FilterList(Operator.MUST_PASS_ALL, Arrays.asList(filter));

ResultScanner scanner = HBaseUtil

.getScanner("FileTable", "rowkey1", "rowkey3", filterList);

if (scanner != null) {

scanner.forEach(result -> {

System.out.println("rowkey=" + Bytes.toString(result.getRow()));

System.out.println("fileName=" + Bytes

.toString(result.getValue(Bytes.toBytes("fileInfo"), Bytes.toBytes("name"))));

System.out.println("fileType=" + Bytes

.toString(result.getValue(Bytes.toBytes("fileInfo"), Bytes.toBytes("type"))));

});

scanner.close();

}

}

}
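Each test in HBaseFilterTest wraps a single filter in a FilterList, so `MUST_PASS_ALL` and `MUST_PASS_ONE` give the same result there. The difference only shows up with two or more filters. The sketch below models the two operators with plain JDK predicates over row keys; it does not use the HBase API, and `FilterListModel` and its methods are hypothetical names.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Predicate;
import java.util.stream.Collectors;

public class FilterListModel {

    /** Models MUST_PASS_ALL: a row key is kept only if every filter accepts it. */
    static Predicate<String> mustPassAll(List<Predicate<String>> filters) {
        return key -> filters.stream().allMatch(f -> f.test(key));
    }

    /** Models MUST_PASS_ONE: a row key is kept if at least one filter accepts it. */
    static Predicate<String> mustPassOne(List<Predicate<String>> filters) {
        return key -> filters.stream().anyMatch(f -> f.test(key));
    }

    /** Returns the row keys accepted by the given filter, in their original order. */
    static List<String> apply(List<String> rowKeys, Predicate<String> filter) {
        return rowKeys.stream().filter(filter).collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> rows = Arrays.asList("rowkey1", "rowkey2", "rowkey3");
        // Stand-in for RowFilter(EQUAL, "rowkey1").
        Predicate<String> equalsRow1 = key -> key.equals("rowkey1");
        // Stand-in for PrefixFilter("rowkey2").
        Predicate<String> prefixRow2 = key -> key.startsWith("rowkey2");
        List<Predicate<String>> both = Arrays.asList(equalsRow1, prefixRow2);

        // prints []
        System.out.println(apply(rows, mustPassAll(both)));
        // prints [rowkey1, rowkey2]
        System.out.println(apply(rows, mustPassOne(both)));
    }
}
```

No row key can equal `rowkey1` and start with `rowkey2` at the same time, so the AND combination is empty while the OR combination keeps both rows.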

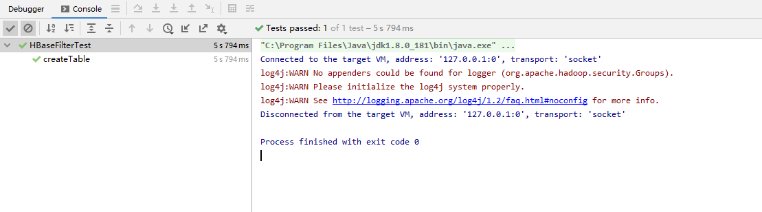

Run createTable, as shown:

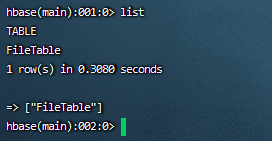

Go to HBase's bin directory, run ./hbase shell to enter the HBase shell, and check with list, as shown:

The FileTable table has been created.

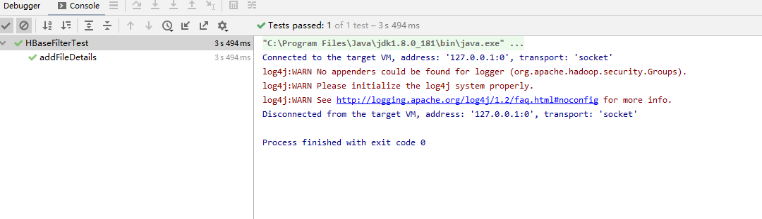

Run addFileDetails(), as shown:

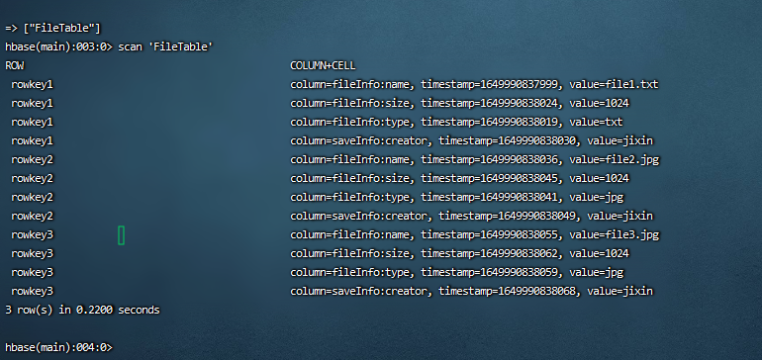

Check with scan 'FileTable', as shown:

The data has been added.

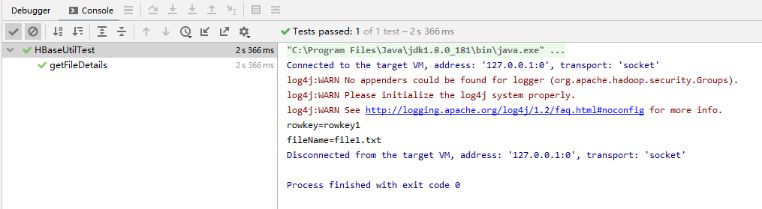
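The filter tests scan from `rowkey1` to `rowkey3`. By default an HBase scan includes the start row and excludes the stop row, so `rowkey3` is never passed to the filters even though it exists in the table. The sketch below models that half-open range with a JDK `TreeMap`; it is only a model (class and method names are hypothetical), and it relies on the keys being plain ASCII so that String order matches byte order.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NavigableMap;
import java.util.TreeMap;

public class ScanRangeModel {

    /** Returns the keys in [startRow, stopRow): start inclusive, stop exclusive. */
    static List<String> scan(NavigableMap<String, String> table, String startRow, String stopRow) {
        return new ArrayList<>(table.subMap(startRow, true, stopRow, false).keySet());
    }

    /** Builds a sorted map with the three row keys and file names used in this post. */
    static NavigableMap<String, String> sampleTable() {
        NavigableMap<String, String> table = new TreeMap<>();
        table.put("rowkey1", "file1.txt");
        table.put("rowkey2", "file2.jpg");
        table.put("rowkey3", "file3.jpg");
        return table;
    }

    public static void main(String[] args) {
        // prints [rowkey1, rowkey2]
        System.out.println(scan(sampleTable(), "rowkey1", "rowkey3"));
        // prints [rowkey1, rowkey2, rowkey3]
        System.out.println(scan(sampleTable(), "rowkey1", "rowkey4"));
    }
}
```

To include `rowkey3` in the real tests, the stop row would need to be a key that sorts after it, such as `rowkey4`.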

Run rowFilterTest(), as shown:

Run prefixFilterTest(), as shown:

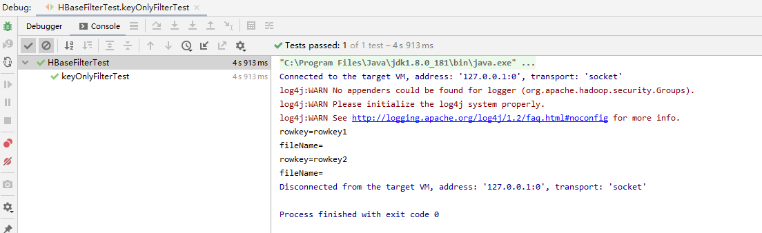

Run keyOnlyFilterTest(), as shown:

Run columnPrefixFilterTest(), as shown: