Reference video (Bilibili): "Possibly the most detailed KubeSphere tutorial (continuously updated)"

For installing Kubernetes (k8s), see: http://www.haijin.xyz/list/article/486

| KubeSphere version | Supported Kubernetes versions |
|---|---|
| v3.0.0 | v1.15.x, v1.16.x, v1.17.x, v1.18.x |
| v3.1.x | v1.17.x, v1.18.x, v1.19.x, v1.20.x |
| v3.2.x | v1.19.x, v1.20.x, v1.21.x, v1.22.x (experimental) |
| v3.3.x | v1.19.x, v1.20.x, v1.21.x, v1.22.x (experimental) |
| v3.4.x | v1.22.x to v1.27.2 |
| v4.1.3 | v1.21 to v1.30 (tested) |
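When choosing a Kubernetes version for a given KubeSphere release, you can check it against the table's range in a script. Below is a minimal bash sketch. It assumes GNU `sort -V` (version sort) is available, and `version_in_range` is a hypothetical helper name. The bounds must be concrete versions, e.g. `1.22.0` and `1.27.2` for the v3.4.x row.

```shell
#!/bin/bash
# Hypothetical helper: succeed if version $1 lies within [$2, $3], inclusive.
# A leading "v" is stripped; ordering relies on GNU `sort -V`.
version_in_range() {
  local v="${1#v}" lo="${2#v}" hi="${3#v}"
  # lo <= v: lo must sort first (or be equal to v)
  [ "$(printf '%s\n%s\n' "$lo" "$v" | sort -V | head -n1)" = "$lo" ] || return 1
  # v <= hi: v must sort first (or be equal to hi)
  [ "$(printf '%s\n%s\n' "$v" "$hi" | sort -V | head -n1)" = "$v" ] || return 1
}
```

Example: `version_in_range v1.23.10 1.22.0 1.27.2 && echo "within the v3.4.x range"`.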

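Download KubeKey v3.0.7. Setting `KKZONE=cn` makes KubeKey download from its China-region sources:

```bash
export KKZONE=cn
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

# If the command above fails to download, fetch the release archive directly and extract it
wget https://kubernetes.pek3b.qingstor.com/kubekey/releases/download/v3.0.7/kubekey-v3.0.7-linux-amd64.tar.gz
tar -zxvf kubekey-v3.0.7-linux-amd64.tar.gz
```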

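Generate a configuration file that includes KubeSphere v3.3.2:

```bash
./kk create config --with-kubesphere v3.3.2
```

If no Kubernetes version is specified, KubeKey installs Kubernetes v1.23.10 by default.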

```bash
# Help for kk and its subcommands
./kk -h
./kk create -h
./kk create config -h

# List the supported Kubernetes versions
./kk version --show-supported-k8s
```
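To test one specific version against that list in a script, you can filter the output with `grep`. This sketch assumes `./kk version --show-supported-k8s` prints one version per line (for example `v1.23.10`). `supports_k8s` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: succeed if version $1 appears as a whole line on stdin.
# -x matches the full line and -F treats the version as a fixed string,
# so v1.23.1 does not match v1.23.10.
supports_k8s() {
  grep -qxF -- "$1"
}
```

Example: `./kk version --show-supported-k8s | supports_k8s v1.23.10 && echo supported`.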

Edit the generated configuration file `config-sample.yaml`.
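While editing, make sure the pod CIDR (`kubePodsCIDR`), the service CIDR (`kubeServiceCIDR`) and the node network do not overlap. The sample config in this post uses `10.233.64.0/18`, `10.233.0.0/18` and nodes on `192.168.204.x`. Below is a pure-bash sketch of the overlap check. The function names are hypothetical and the code handles IPv4 only.

```shell
#!/bin/bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print the first and last address (as integers) of a CIDR block such as 10.233.0.0/18.
cidr_range() {
  local ip="${1%/*}" len="${1#*/}" base size mask
  base=$(ip_to_int "$ip")
  size=$(( 1 << (32 - len) ))
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  base=$(( base & mask ))
  echo "$base $(( base + size - 1 ))"
}

# Succeed (return 0) if the two CIDR blocks overlap, fail otherwise.
cidr_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}
```

Example: `cidr_overlap 10.233.64.0/18 10.233.0.0/18 || echo "no overlap"`. The two /18 blocks are adjacent, so this prints `no overlap`.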

Create the cluster from the configuration file:

```bash
./kk create cluster -f config-sample.yaml
```

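`conntrack` and `socat` must be installed on all three nodes, because KubeKey checks for them before installing:

```bash
yum install -y conntrack socat
```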
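Below is a minimal sketch for confirming the dependencies on a node before running `kk`. The `check_deps` function is a hypothetical helper and is not part of KubeKey. Run it on each of the three nodes.

```shell
#!/bin/bash
# Hypothetical helper: print every command from the argument list that is not
# on PATH. Returns 0 if all are present, 1 if any are missing.
check_deps() {
  local cmd missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd"
      missing=1
    fi
  done
  return "$missing"
}
```

Example: `check_deps conntrack socat || yum install -y conntrack socat`.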

config-sample.yaml国内的配置:

apiVersion: kubekey.kubesphere.io/v1alpha2

kind: Cluster

metadata:

name: sample

spec:

hosts:

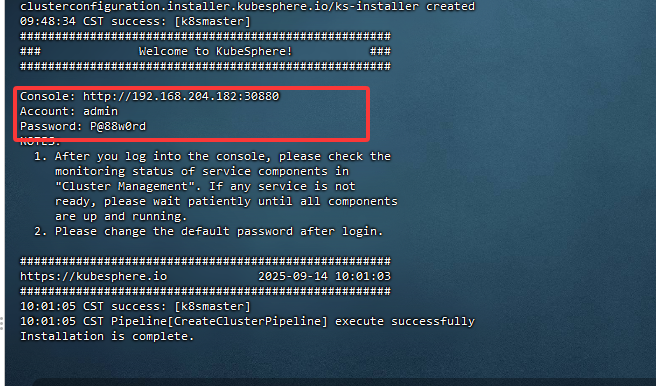

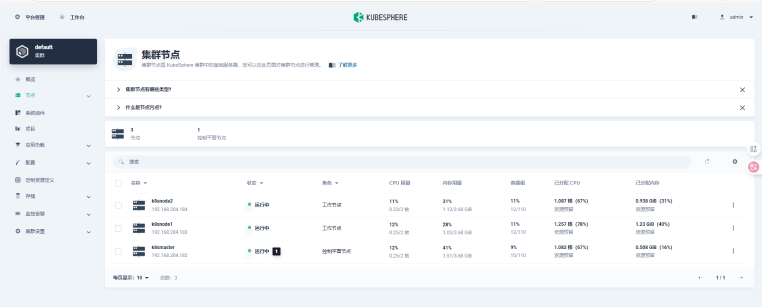

- {name: k8smaster, address: 192.168.204.182, internalAddress: 192.168.204.182, user: root, password: "liuhaijin"}

- {name: k8snode1, address: 192.168.204.183, internalAddress: 192.168.204.183, user: root, password: "liuhaijin"}

- {name: k8snode2, address: 192.168.204.184, internalAddress: 192.168.204.184, user: root, password: "liuhaijin"}

roleGroups:

etcd:

- k8smaster

control-plane:

- k8smaster

worker:

- k8snode1

- k8snode2

controlPlaneEndpoint:

domain: lb.kubesphere.local

address: "" # 单 master 模式,留空

port: 6443

kubernetes:

version: v1.23.10

clusterName: cluster.local

autoRenewCerts: true

containerManager: docker

# ✅ 添加国内镜像源

images:

kubeImageRepo: registry.cn-hangzhou.aliyuncs.com/google_containers

etcdImageRepo: registry.cn-hangzhou.aliyuncs.com/google_containers

pauseImageRepo: registry.cn-hangzhou.aliyuncs.com/google_containers/pause

coreDNSImageRepo: coredns/coredns

etcd:

type: kubekey

network:

plugin: calico

kubePodsCIDR: 10.233.64.0/18

kubeServiceCIDR: 10.233.0.0/18

multusCNI:

enabled: false

registry:

privateRegistry: ""

namespaceOverride: ""

# ✅ 添加 Docker 国内镜像加速

registryMirrors:

- https://registry.cn-hangzhou.aliyuncs.com

- https://docker.mirrors.ustc.edu.cn

- https://hub-mirror.c.163.com

insecureRegistries: []

addons: []

---

# KubeSphere 安装配置(保持不变)

apiVersion: installer.kubesphere.io/v1alpha1

kind: ClusterConfiguration

metadata:

name: ks-installer

namespace: kubesphere-system

labels:

version: v3.3.2

spec:

persistence:

storageClass: ""

authentication:

jwtSecret: ""

zone: ""

local_registry: ""

namespace_override: ""

etcd:

monitoring: false

endpointIps: localhost

port: 2379

tlsEnable: true

common:

core:

console:

enableMultiLogin: true

port: 30880

type: NodePort

redis:

enabled: false

volumeSize: 2Gi

openldap:

enabled: false

volumeSize: 2Gi

minio:

volumeSize: 20Gi

monitoring:

endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090

GPUMonitoring:

enabled: false

gpu:

kinds:

- resourceName: "nvidia.com/gpu"

resourceType: "GPU"

default: true

es:

logMaxAge: 7

elkPrefix: logstash

basicAuth:

enabled: false

username: ""

password: ""

externalElasticsearchHost: ""

externalElasticsearchPort: ""

alerting:

enabled: false

auditing:

enabled: false

devops:

enabled: false

jenkinsMemoryLim: 8Gi

jenkinsMemoryReq: 4Gi

jenkinsVolumeSize: 8Gi

events:

enabled: false

logging:

enabled: false

logsidecar:

enabled: true

replicas: 2

metrics_server:

enabled: false

monitoring:

storageClass: ""

node_exporter:

port: 9100

gpu:

nvidia_dcgm_exporter:

enabled: false

multicluster:

clusterRole: none

network:

networkpolicy:

enabled: false

ippool:

type: none

topology:

type: none

openpitrix:

store:

enabled: false

servicemesh:

enabled: false

istio:

components:

ingressGateways:

- name: istio-ingressgateway

enabled: false

cni:

enabled: false

edgeruntime:

enabled: false

kubeedge:

enabled: false

cloudCore:

cloudHub:

advertiseAddress:

- ""

service:

cloudhubNodePort: "30000"

cloudhubQuicNodePort: "30001"

cloudhubHttpsNodePort: "30002"

cloudstreamNodePort: "30003"

tunnelNodePort: "30004"

iptables-manager:

enabled: true

mode: "external"

terminal:

timeout: 600优雅的关机脚本cluster-shutdown.sh :

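```bash
#!/bin/bash
# Simplified one-click shutdown script (password-based SSH)
USERNAME="root"
PASSWORD="liuhaijin"
NODES=("k8snode1" "k8snode2" "k8smaster")
MASTER="k8smaster"

# Install sshpass if it is missing
if ! command -v sshpass &> /dev/null; then
  echo "Installing sshpass..."
  sudo apt-get install -y sshpass || sudo yum install -y sshpass
fi

# Run a command on a remote host over SSH, authenticating with the password
ssh_cmd() {
  sshpass -p "$PASSWORD" ssh -o StrictHostKeyChecking=no "$USERNAME@$1" "$2"
}

echo "Shutting down the Kubernetes cluster..."

# Cordon and drain every node (evict its pods)
for node in "${NODES[@]}"; do
  echo "Draining node: $node"
  kubectl cordon "$node"
  kubectl drain "$node" --ignore-daemonsets --delete-emptydir-data --force --timeout=60s
done

# Power off the worker nodes first, in parallel
for node in "${NODES[@]}"; do
  [ "$node" = "$MASTER" ] && continue
  echo "Shutting down node: $node"
  ssh_cmd "$node" "echo '$PASSWORD' | sudo -S shutdown -h now" &
done
wait

# Power off the control-plane node last, since it may be the host running this script
echo "✅ Shutdown command sent to all worker nodes; shutting down $MASTER"
ssh_cmd "$MASTER" "echo '$PASSWORD' | sudo -S shutdown -h now"
```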

To start the cluster again: `./kk create cluster -f config-sample.yaml`
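The shutdown script cordons and drains every node. Those nodes stay marked `SchedulingDisabled` after they power back on until they are uncordoned. Below is a minimal sketch that waits for the nodes to report Ready and then uncordons them. The `uncordon_all` function and the `KUBECTL` override are hypothetical additions. The override lets you dry-run the loop with `KUBECTL=echo`.

```shell
#!/bin/bash
# KUBECTL may be overridden (e.g. KUBECTL=echo for a dry run); defaults to kubectl.
KUBECTL="${KUBECTL:-kubectl}"
NODES=("k8snode1" "k8snode2" "k8smaster")

# Hypothetical helper: wait until all nodes are Ready, then uncordon each node given.
# Returns 1 if the wait or any uncordon fails.
uncordon_all() {
  local node rc=0
  # Block until every node reports Ready, or give up after 5 minutes
  "$KUBECTL" wait --for=condition=Ready node --all --timeout=300s || rc=1
  for node in "$@"; do
    echo "Uncordoning node: $node"
    "$KUBECTL" uncordon "$node" || rc=1
  done
  return "$rc"
}
```

Example: `uncordon_all "${NODES[@]}"`.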

To delete the cluster: `./kk delete cluster -f config-sample.yaml`

If the cluster breaks after a shutdown, you can wipe it with the following `k8s-cleanup.sh` script:

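```bash
#!/bin/bash
# k8s-cleanup.sh: wipe Kubernetes and Docker state from this node (run on every node)
echo "Cleaning up the Kubernetes cluster..."

# Must be run as root
if [ "$EUID" -ne 0 ]; then
  echo "Please run this script as root"
  exit 1
fi

# Delete all workloads (only succeeds while the API server is still reachable)
echo "1. Deleting all workloads..."
kubectl delete all --all --all-namespaces 2>/dev/null || true
kubectl delete pvc --all --all-namespaces 2>/dev/null || true
kubectl delete pv --all 2>/dev/null || true

# Reset kubeadm
echo "2. Resetting kubeadm..."
kubeadm reset --force 2>/dev/null || true

# Stop kubelet so it does not recreate containers during cleanup
echo "3. Stopping kubelet..."
systemctl stop kubelet 2>/dev/null || true

# Remove containers and images while the Docker daemon is still running,
# then stop Docker and delete its data directory
echo "4. Cleaning up Docker..."
docker rm -f $(docker ps -aq 2>/dev/null) 2>/dev/null || true
docker rmi -f $(docker images -aq 2>/dev/null) 2>/dev/null || true
docker system prune -a --volumes -f 2>/dev/null || true
systemctl stop docker 2>/dev/null || true
rm -rf /var/lib/docker

# Uninstall the Kubernetes packages
echo "5. Removing Kubernetes components..."
yum remove -y kubelet kubeadm kubectl 2>/dev/null || true

# Remove configuration and state directories
echo "6. Removing configuration files..."
rm -rf /etc/kubernetes
rm -rf /var/lib/kubelet
rm -rf /var/lib/etcd
rm -rf /etc/cni/net.d
rm -rf /opt/cni/bin
rm -rf /var/lib/flannel
rm -rf ~/.kube

# Flush iptables rules and IPVS tables
echo "7. Cleaning up network configuration..."
iptables -F
iptables -t nat -F
iptables -t mangle -F
iptables -X
ipvsadm -C 2>/dev/null || true

# Remove the Kubernetes and Docker YUM repositories
echo "8. Removing YUM repositories..."
rm -f /etc/yum.repos.d/kubernetes.repo
rm -f /etc/yum.repos.d/docker-ce.repo
yum clean all

# Disable the services
echo "9. Disabling services..."
systemctl disable kubelet 2>/dev/null || true
systemctl disable docker 2>/dev/null || true
systemctl daemon-reload

echo "Cleanup complete! Rebooting is recommended so that all changes take effect."
echo "Run 'reboot' to restart the system."
```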
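After running the cleanup script and rebooting, you can verify that no state directories survived. Below is a minimal sketch. `find_leftovers` is a hypothetical helper, and its default list mirrors the paths the script removes.

```shell
#!/bin/bash
# Default paths mirror those removed by k8s-cleanup.sh (run as root, so ~ is /root).
DEFAULT_PATHS=(/etc/kubernetes /var/lib/kubelet /var/lib/etcd /etc/cni/net.d
  /opt/cni/bin /var/lib/flannel /var/lib/docker "$HOME/.kube")

# Hypothetical helper: print each path that still exists.
# Returns 1 if any were found, 0 otherwise. With no arguments, checks DEFAULT_PATHS.
find_leftovers() {
  local p found=0
  local paths=("$@")
  if [ "${#paths[@]}" -eq 0 ]; then
    paths=("${DEFAULT_PATHS[@]}")
  fi
  for p in "${paths[@]}"; do
    if [ -e "$p" ]; then
      echo "still present: $p"
      found=1
    fi
  done
  return "$found"
}
```

Example: `find_leftovers && echo "node is clean"`.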